
20-year-old Indian creates AI to translate sign language, live!

Engineering student Priyanjali Gupta has developed AI software that translates sign language into words in real time.


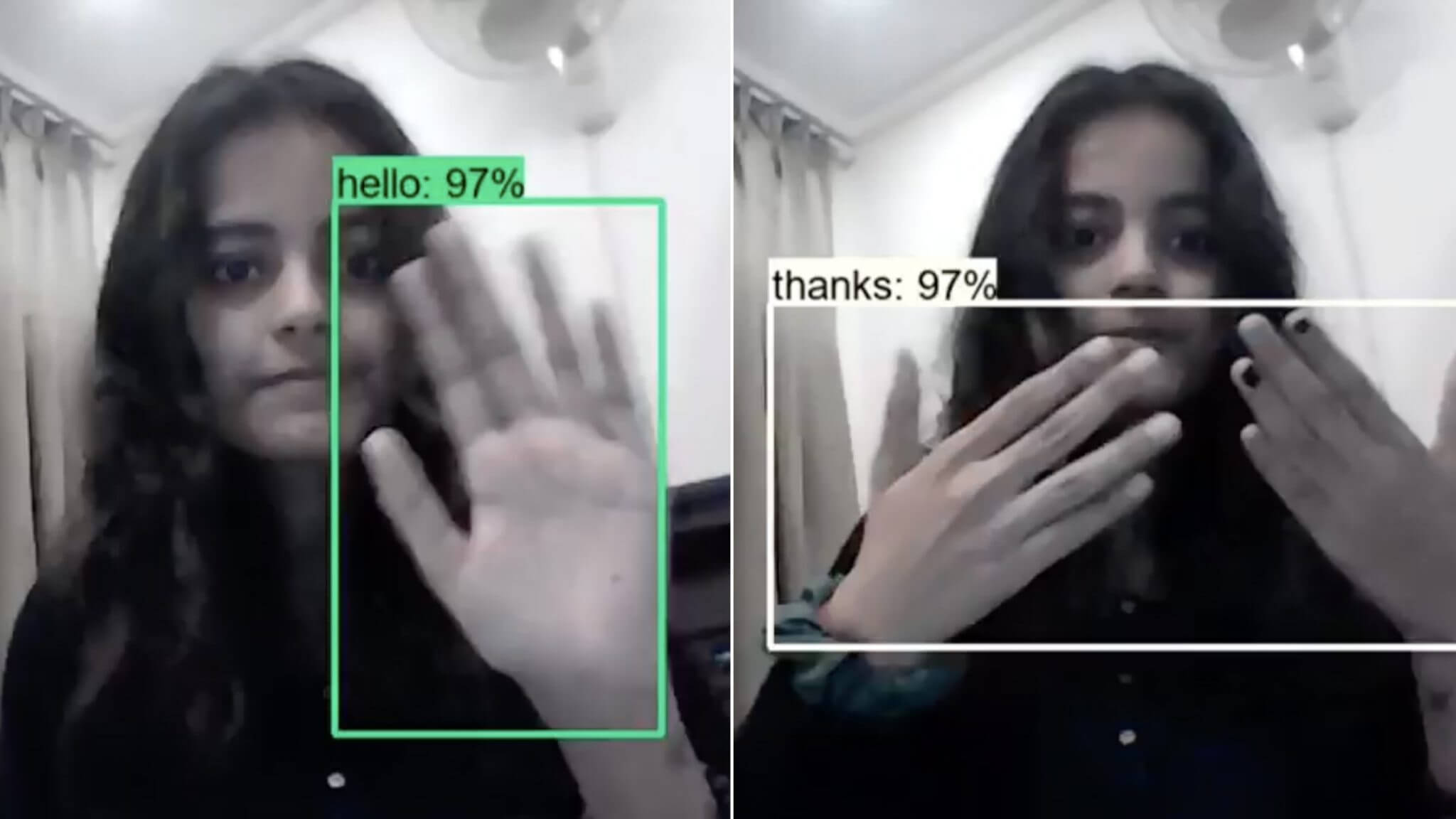

A 20-year-old Indian engineering student has used AI to develop software that translates sign language into words in real time. Priyanjali Gupta, a third-year student at the Vellore Institute of Technology in Tamil Nadu, India, built a program that uses a webcam and AI-based image recognition to identify sign-language gestures and translate them instantly into words. For now, the software translates only American Sign Language (ASL); other sign languages use different signs. Because the project is open source, the scientific community is likely to take it up, replicate it, adapt it to other sign languages and improve it. Source: Initiatives

Priyanjali shared her software on LinkedIn, where the post drew more than 66,000 likes. She says: “I am self-taught with a keen interest in development and research. I look for opportunities to apply my technical knowledge to build things to solve current problems facing the world.” Her interest in inclusive technology is what led the aspiring engineer to start this very promising project. Source: GitHub

Her AI model, which turns sign language into English in real time, went viral on LinkedIn.

Gupta told Analytics India Magazine:

“I believe that we don’t have enough inclusivity in tech for the specially-abled people. What if the deaf and the mute want to access voice assistants like Google, Echo or Alexa? We really don’t have any solutions for that. I could also not stop thinking about one of my mother’s students who is hearing impaired and how it would be difficult for her to express her thoughts. I decided to make this small-scale model.

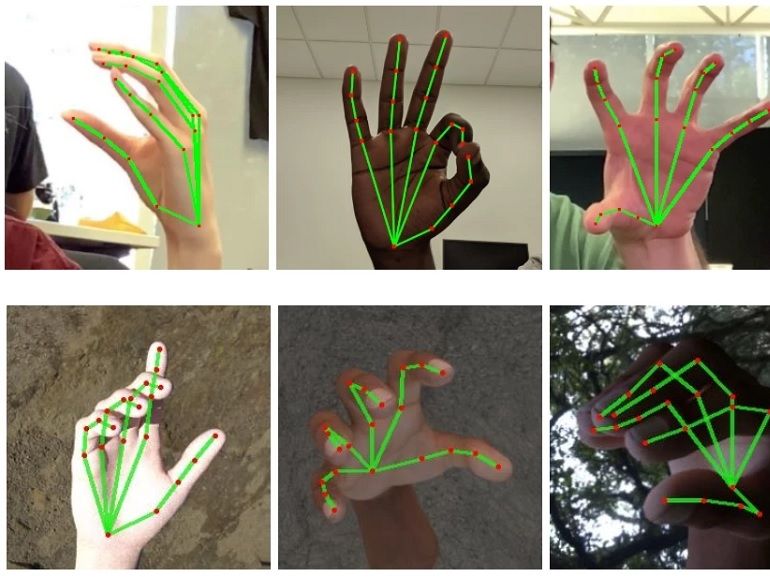

“This AI-powered model is inspired by data scientist Nicholas Renotte and is built using TensorFlow object detection API. It has many additional capabilities, which makes it a lot easier to build object detection models. I used a small data set of 250 to 300 images, gave the annotations manually, and used a pre-trained SSD model for accuracy. The code is written in Python, and I have run everything in Jupyter Notebook.
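The TensorFlow Object Detection API reads class names from a label map written in protobuf text format, so a project like this one needs such a file before training. The snippet below is a minimal sketch of generating one, assuming the six gestures named in this article are the detector's classes; the function names and the `label_map.pbtxt` filename are hypothetical, and the file in Gupta's repository may be built differently.

```python
from pathlib import Path

# Assumed classes: the six gestures listed in the article, in that order.
GESTURES = ["Yes", "No", "Please", "Thank you", "I love you", "Hello"]


def build_label_map(labels):
    """Return label-map text in the protobuf text format used by the
    TensorFlow Object Detection API. IDs start at 1 because ID 0 is
    reserved for the background class."""
    entries = []
    for idx, name in enumerate(labels, start=1):
        entries.append(f"item {{\n  name: '{name}'\n  id: {idx}\n}}\n")
    return "".join(entries)


def write_label_map(labels, path):
    """Write the label map to `path` (e.g. a hypothetical label_map.pbtxt)."""
    text = build_label_map(labels)
    Path(path).write_text(text, encoding="utf-8")
    return text


if __name__ == "__main__":
    print(build_label_map(GESTURES))
```

Each annotated training image then refers to one of these IDs, so the order of the list must stay fixed once annotation begins.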

“I believe that the issue of understanding sign language is really big and hard, and a small-scale object detection model cannot solve it. I believe that sign languages include facial expressions, shoulder movements and many more things, and it requires a very well trained deep neural network to understand everything.

“There is research being done on it, and I believe that we will have a solution, maybe in the next three to five years—not the perfect solution, but certainly the one that can be implemented in real life and has the flexibility to be tuned with advancing technology. With my solution going viral, I believe that it can make experts and professionals think more about the issue and find better solutions to improve inclusivity.”

After graduating, Gupta plans to work for two years to learn how real-world problems are solved, how things work, how companies function and how people manage their businesses, and then to pursue a master’s degree.

A post about her AI model, which turns sign language into English in real time, went viral on LinkedIn, and the source code is available on GitHub. The comments above by Priyanjali Gupta are excerpts from a longer interview with Analytics India Magazine.

Source: AnalyticsIndiaMagazine

The system converts sign-language gestures into text by analysing the positions of several body parts, such as the arms and fingers. The image recognition technology the student used relies on digitised images of several people signing. The software could give deaf and hard-of-hearing people a dynamic, real-time way to communicate. Source: Initiatives.media
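Turning per-frame detections into words is a post-processing step. Below is a minimal sketch, assuming a detector that returns parallel `detection_scores` and `detection_classes` arrays with 1-based class IDs, as an exported TensorFlow Object Detection model typically does (some pipelines return 0-based IDs and need an offset of 1). The 0.8 confidence threshold, the three-frame stability rule and the function names are illustrative assumptions, not details of Gupta's code.

```python
# Hypothetical class-ID -> word mapping, following the order of the six
# gestures listed in the article (IDs are 1-based).
LABELS = {1: "Yes", 2: "No", 3: "Please", 4: "Thank you", 5: "I love you", 6: "Hello"}


def top_gesture(scores, classes, labels=LABELS, threshold=0.8):
    """Return the word for the highest-scoring known detection in one frame,
    or None if no detection reaches `threshold`."""
    best_word, best_score = None, None
    for score, cls in zip(scores, classes):
        word = labels.get(int(cls))  # detectors often return class IDs as floats
        if word is None or score < threshold:
            continue
        if best_score is None or score > best_score:
            best_word, best_score = word, score
    return best_word


def stable_words(frames, min_frames=3, threshold=0.8):
    """Emit a word once it has been the top gesture for `min_frames`
    consecutive frames, and do not repeat it until the gesture changes.

    `frames` is an iterable of (scores, classes) pairs, one per webcam frame."""
    words, current, run, emitted = [], None, 0, False
    for scores, classes in frames:
        word = top_gesture(scores, classes, threshold=threshold)
        if word == current:
            run += 1
        else:
            current, run, emitted = word, 1, False
        if current is not None and run >= min_frames and not emitted:
            words.append(current)
            emitted = True
    return words


if __name__ == "__main__":
    demo = [([0.92, 0.10], [6, 1])] * 3 + [([0.40], [2])] + [([0.95], [1])] * 3
    print(stable_words(demo))
```

The demo prints `['Hello', 'Yes']`: the low-confidence frame in the middle resets the run, so each word is emitted once. Requiring a gesture to persist for a few frames is one simple way to stop single-frame misdetections from flickering into the text.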

To begin with, it can recognise six gestures: Yes, No, Please, Thank you, I love you and Hello. Much more data is needed to build a more complete model, but as a proof of concept, the system works. Priyanjali Gupta is now investigating long short-term memory (LSTM) networks, a type of recurrent neural network, so that the software can take several consecutive images into account rather than one at a time. Source: Initiatives.media
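Many signs unfold over several frames, which is why a recurrent model such as an LSTM is a natural next step. The sketch below is a minimal pure-Python LSTM cell that folds a sequence of per-frame feature vectors into one hidden state; the feature size, hidden size and random initialisation are illustrative assumptions, the weights are untrained, and this is not Gupta's implementation.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class LSTMCell:
    """Single LSTM cell with input, forget and output gates (untrained)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        n = input_size + hidden_size

        def init():  # small random weights, one matrix per gate
            return [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(hidden_size)]

        self.W_i, self.W_f, self.W_o, self.W_c = init(), init(), init(), init()
        self.b_i = [0.0] * hidden_size
        self.b_f = [1.0] * hidden_size  # common choice: bias the forget gate toward remembering
        self.b_o = [0.0] * hidden_size
        self.b_c = [0.0] * hidden_size
        self.hidden_size = hidden_size

    def step(self, x, h, c):
        z = list(x) + list(h)  # concatenate current input and previous hidden state
        i = [sigmoid(a + b) for a, b in zip(matvec(self.W_i, z), self.b_i)]
        f = [sigmoid(a + b) for a, b in zip(matvec(self.W_f, z), self.b_f)]
        o = [sigmoid(a + b) for a, b in zip(matvec(self.W_o, z), self.b_o)]
        g = [math.tanh(a + b) for a, b in zip(matvec(self.W_c, z), self.b_c)]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, frames):
        """Feed frames in order and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in frames:
            h, c = self.step(x, h, c)
        return h


if __name__ == "__main__":
    cell = LSTMCell(input_size=2, hidden_size=4)
    print(cell.run([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]))
```

Because the cell state carries information forward, the same frames in a different order give a different final state, which is what lets a sequence model tell apart signs that share individual poses. In a trained system the final `h` would feed a small dense layer with one output per gesture; in TensorFlow that role is usually played by `tf.keras.layers.LSTM` followed by `tf.keras.layers.Dense`.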